Disclaimer: I’m sharing my observations below without making any claims, in the hope that others in the SEO industry will test these ideas further with me. Remember, correlation does not imply causation.

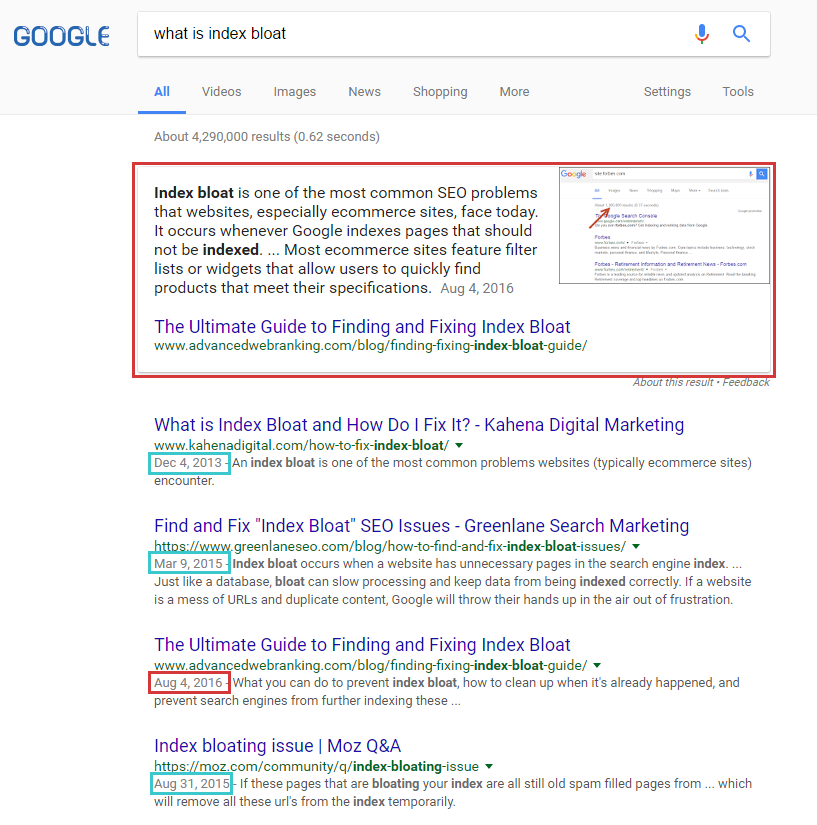

I’m fascinated by Answer Boxes. You know, these things:

While Answer Boxes go back several years, they have become more prevalent in recent years. I read several great posts last year on what characteristics correlate with receiving this “gift” from Google. To name a few:

- Ranking #0: SEO for Answers by Dr. Pete

- Whitepaper: How to Get More Featured Snippets by STAT

- How to Optimize Your Content for Google’s Featured Snippet Box by Matthew Barby

- Optimizing for Google’s Quick Answer Box by Builtvisible

Why do I call it a gift? Despite initial fears that this feature would reduce the need to click through on search results, Answer Boxes often seem to drive traffic when attached to a strong-performing keyword. I’ve seen it firsthand with our clients, and I’ve noticed it in my own behavior as a Google user. I click them all the time (depending on the answer, of course).

Google looks at several signals and presumably assigns a weight and bias to each (further substantiated by a recently published patent). The web page with the best combined score across those categories receives the coveted Answer Box. It’s like the recipe for brewing an IPA: grain, hops, yeast, water and sugar must all be present. If yeast is lacking, your beer has no chance. If hops are lacking, you have a lower shot at brewing a highly ranked beer. So the Answer Box may simply go to the page with the optimal mix, or at least the best mix among the pages ranking on the first page.

I constantly experiment with my company’s website, www.greenlaneseo.com. So, I dug for keyword phrases (via SEMrush) that pull up Answer Boxes and discovered that one of our blog posts could be a candidate:

I wrote a post a while back about index bloat, and how to manage it. However, Google had chosen Advanced Web Rankings’ post for its Answer Box. I became jealous.

As a test, using all the material I studied from the above posts, I wanted to see if I could steal this Answer Box. It’s a “paragraph” Answer Box, so I approached it using what the SEO industry has collectively learned about these specific types of answers. Enjoy my process!

1. First attempt: The basics

While Google doesn’t require the on-page content to be in question-and-answer form, it sure seems to be correlated with Answer Box placement. So, I made sure the question, “What is index bloat?” was properly posed and answered on our web page.

My writing style is not always the most concise, so I tightened up the lead paragraph into a stronger, more direct answer, hoping to appeal to Google without ruining its value for human readers.

I also went all-in on the use of a header tag. I made an H1 that matched the target keyword phrase, “what is index bloat.” Additionally, I made sure the answer content was wrapped in a proper HTML <p> tag.
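For readers who want to sanity-check these basics on their own pages, here is a minimal Python sketch using only the standard library. It was not part of the original experiment. The sample HTML, the `check_basics` helper and the report keys are all hypothetical, and passing these checks says nothing about whether Google will actually grant an Answer Box.

```python
from html.parser import HTMLParser


class HeadingAndLeadParser(HTMLParser):
    """Collects the text of the first <h1> and the first <p> on a page."""

    def __init__(self):
        super().__init__()
        self.h1 = None
        self.first_p = None
        self._capturing = None  # tag currently being captured, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self._capturing is not None:
            return
        if tag == "h1" and self.h1 is None:
            self._capturing, self._buffer = "h1", []
        elif tag == "p" and self.first_p is None:
            self._capturing, self._buffer = "p", []

    def handle_data(self, data):
        if self._capturing is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._capturing:
            # Join the captured fragments and collapse runs of whitespace.
            text = " ".join("".join(self._buffer).split())
            if tag == "h1":
                self.h1 = text
            else:
                self.first_p = text
            self._capturing = None


def normalize(text):
    """Lowercase, drop punctuation and collapse whitespace."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def check_basics(html, target_phrase):
    """Report whether the H1 matches the phrase and a lead <p> exists."""
    parser = HeadingAndLeadParser()
    parser.feed(html)
    lead = parser.first_p or ""
    return {
        "h1_matches_phrase": normalize(parser.h1 or "") == normalize(target_phrase),
        "has_lead_paragraph": bool(lead),
        "lead_word_count": len(lead.split()),
    }


# Hypothetical page markup, invented for this illustration.
SAMPLE_HTML = """
<html><body>
<h1>What Is Index Bloat?</h1>
<p>Index bloat is when a search engine indexes pages that offer little value to searchers.</p>
</body></html>
"""

report = check_basics(SAMPLE_HTML, "what is index bloat")
print(report)
```

The word count is included only because concise lead paragraphs seem to correlate with paragraph-style Answer Boxes; there is no known threshold to check it against.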

Once all these changes had been made, I went to Google Search Console to submit it to the index (Fetch as Google > submit to index).

I waited a few weeks, but no dice. Some have seen changes occur in a matter of hours upon submitting to the index, but I was not that lucky.

2. Second attempt: Natural language analysis

I struck out on my first attempt, so I had to start thinking outside the box.

I started to analyze not only the content that was being used in the Answer Box, but also the whole copy on the victorious Advanced Web Rankings page. Post-Hummingbird, we know Google has improved its ability to understand text beyond keyword presence. So maybe my page was not yet triggering Google’s (still elementary) comprehension? Perhaps Advanced Web Rankings won Google over not because of the actual value of their content, but due to their word selection? Maybe I was giving Google’s comprehension level a bit too much credit.

I decided to run their page through a natural language processor. AlchemyAPI has a tool for that, which resulted in the following output:

In addition to Alchemy, I used Google’s own Cloud Natural Language API. I thought that one might have a little more synergy with Google’s decision-making.

(By the way, both tools have free trials on their respective websites.)

I focused most heavily on entities, keywords, concepts and relations. I emulated and implemented what I saw, hoping to enrich my content and appeal more to Google. I found that in doing this, I actually improved the copy. (To be honest, this is a technique I’ve been using for a while.)
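The NLP tools themselves are not reproduced here. As a much cruder stand-in, the sketch below compares the most frequent terms in two pieces of copy and lists the ones the competing text uses that yours does not. This is plain word counting, not entity or concept extraction, and the stopword list, function names and sample sentences are all assumptions made for illustration.

```python
import re
from collections import Counter

# Small hand-picked stopword list; an assumption for this sketch only.
STOPWORDS = {
    "a", "an", "and", "are", "as", "by", "for", "in", "is", "it",
    "of", "on", "or", "that", "the", "to", "when", "with",
}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def top_terms(text, n=10):
    """Return up to n of the most frequent non-stopword terms."""
    counts = Counter(
        word for word in tokenize(text)
        if word not in STOPWORDS and len(word) > 2
    )
    return [term for term, _ in counts.most_common(n)]


def missing_terms(my_text, competitor_text, n=10):
    """Top competitor terms that never appear in my_text."""
    mine = set(tokenize(my_text))
    return [term for term in top_terms(competitor_text, n) if term not in mine]


# Hypothetical copy, invented for this illustration.
competitor_copy = (
    "Index bloat happens when search engines index low-value pages. "
    "Low-value pages waste crawl budget."
)
my_copy = "Index bloat is a problem for large sites."

gaps = missing_terms(my_copy, competitor_copy)
print(gaps)
```

A list like this is only a prompt for rereading your copy; stuffing in the missing words is not the point.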

Once I felt the copy was enhanced, I Fetched as Google again and waited. Still no dice. After two weeks, I accepted defeat again.

3. Third attempt: Manufacturing freshness, AKA the long shot

I was still trying to find clues. What else was different between my page and the winning page? Well, the Advanced Web Rankings post was fresher, judging by the publish date shown in the SERPs. They wrote their post more recently than I wrote mine. If Google used age as a factor (which is feasible), could I trick Google into thinking my article was newer? Could that help?

So, I updated the post’s publish date to be more recent than any of the competing pages. Admittedly, it was pretty hacky, but all in the name of experimenting! Google is now displaying the new date in the SERPs, but they probably know that’s not the true age of the post. No success here, but I really didn’t expect Google to be this naïve. Good on you, Google.

4. Fourth attempt: Title tags, AKA getting desperate

At this point, I felt like I had covered everything suggested in other posts, so I went “old school” with nothing to lose. I tweaked the title tag to match the H1 tag and contain the actual question in full. In this day and age, I’m still surprised to see how powerful the title tag can be sometimes. So, maybe it was a factor that could be the final nudge?

I updated the title tag and once again resubmitted the URL through Google Search Console’s Fetch as Google.

In less than 10 minutes, my title tag was live in the SERPs. That’s remarkable! Using Chrome in Incognito mode, I not only saw my new title tag with a new (and accurate) cache date, but also saw an improved ranking. I’m always surprised by Fetch as Google: sometimes it’s nearly instant, and sometimes it has virtually no noticeable effect.

A one-spot improvement in ranking was not what I was after, however, so once again I had to accept failure. It was starting to feel like my years of internet dating.

5. Fifth attempt: Operation link building

I really didn’t have any more ideas at this stage. Could it have something to do with a signal that hasn’t been well documented or explored? Perhaps the architecture of the domain as a whole? Page speed? Surely not the meta keywords tag!

What about backlinks? That would make some sense — after all, links are a traditional (and often strong) SEO signal. Using Ahrefs, I pulled some link metrics for these pages:

- http://ift.tt/2aT9rAd — 93 backlinks, 8 referring domains

- http://ift.tt/2mw5GpJ — 3 backlinks, 3 referring domains

Yikes. I was outnumbered here. So, I asked some friends to help me with my experiment.

To boost my link count, I asked for a simple “whatever you choose” anchor text link directly to the post, on a healthy domain and relevant page. I asked my friends not to click on the results (to weed out user metrics as a factor). I also asked them to Fetch as Google to hurry the experiment along. Thanks to John-Henry Scherck, Ian Howells, Jon Cooper, Nicholas Chimonas, Zeph Snapp, Marie Haynes, Sean Malseed, and James Agate, I received eight links.

(Since I know it’s going to be asked, the links came from sites with a range of Domain Authority (DA) scores, with nothing too high and nothing too low. All white-hat sites.)

About three days after the final link was added, something magical happened. The elusive Answer Box finally appeared.

At this point, we need to pause our story. Could it be a coincidence? A small algorithm tweak? Other factors? Most certainly. Remember above where I said correlation does not always imply causation? This is where we need to put that statement into play. Nonetheless, it’s an interestingly timed observation.

To get a little closer to the truth, I bugged the same link-granting friends to remove their links. They obliged, and about three weeks later the Answer Box was given back to Advanced Web Rankings.

Putting it all together

I don’t want to spread misinformation into the industry. I’m not saying links are the magic ingredient. This is merely a potential clue worth further investigation.

If Google does rank on several signals and grants Answer Boxes based on a specific mix (e.g., my beer recipe example at the beginning of this post), then perhaps links really are an ingredient we haven’t truly factored in yet. That could explain why some sites with more links still don’t get the Answer Box: links may simply not be a priority signal. Possibly they are just a signal that, in this case, acted as a tie-breaker.
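To make the “recipe” idea concrete, here is a toy Python model of a weighted mix in which links carry a small weight. Everything in it is hypothetical: the signal names, the weights, the required ingredients and the page scores are invented, and nothing here reflects how Google actually scores pages. It only shows how a low-priority signal could decide between otherwise similar pages while failing to rescue a weaker one.

```python
from dataclasses import dataclass

# Hypothetical weights: links matter, but far less than the other signals.
WEIGHTS = {
    "answer_format": 0.40,
    "relevance": 0.40,
    "freshness": 0.15,
    "links": 0.05,
}

# Hypothetical "yeast": without these ingredients the page has no chance.
REQUIRED = {"answer_format", "relevance"}


@dataclass
class Page:
    name: str
    signals: dict  # signal name -> score between 0.0 and 1.0


def score(page):
    """Weighted sum of signals; zero if any required signal is missing."""
    if any(page.signals.get(name, 0.0) <= 0.0 for name in REQUIRED):
        return 0.0
    return sum(
        weight * page.signals.get(name, 0.0)
        for name, weight in WEIGHTS.items()
    )


def pick_winner(pages):
    """Return the page with the highest combined score."""
    return max(pages, key=score)


# Invented pages: two that are near-identical except for links,
# and one with many links but a weaker answer.
few_links = Page("few_links", {
    "answer_format": 0.9, "relevance": 0.9, "freshness": 0.5, "links": 0.2,
})
more_links = Page("more_links", {
    "answer_format": 0.9, "relevance": 0.9, "freshness": 0.5, "links": 0.8,
})
link_heavy = Page("link_heavy", {
    "answer_format": 0.5, "relevance": 0.6, "freshness": 0.5, "links": 1.0,
})

winner = pick_winner([few_links, more_links, link_heavy])
print(winner.name)
```

In this toy setup, links separate the two near-identical pages, yet the page with the most links still loses because its answer is weaker, which is consistent with both observations above.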

Hopefully, you found this journey of triumph and failures useful, and a path towards winning your own Answer Boxes. I’m very interested to see the conversation continue in the comments, and even more interested to see if anyone can improve on these findings.

The post An answer box experiment (my journey into known and unknown factors) appeared first on Search Engine Land.
